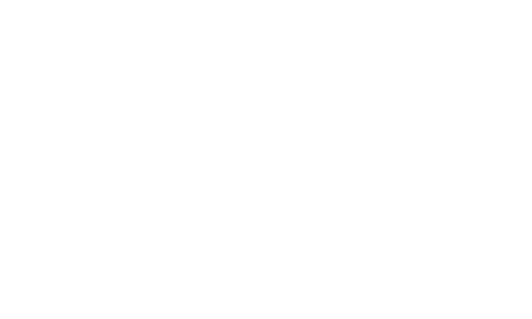

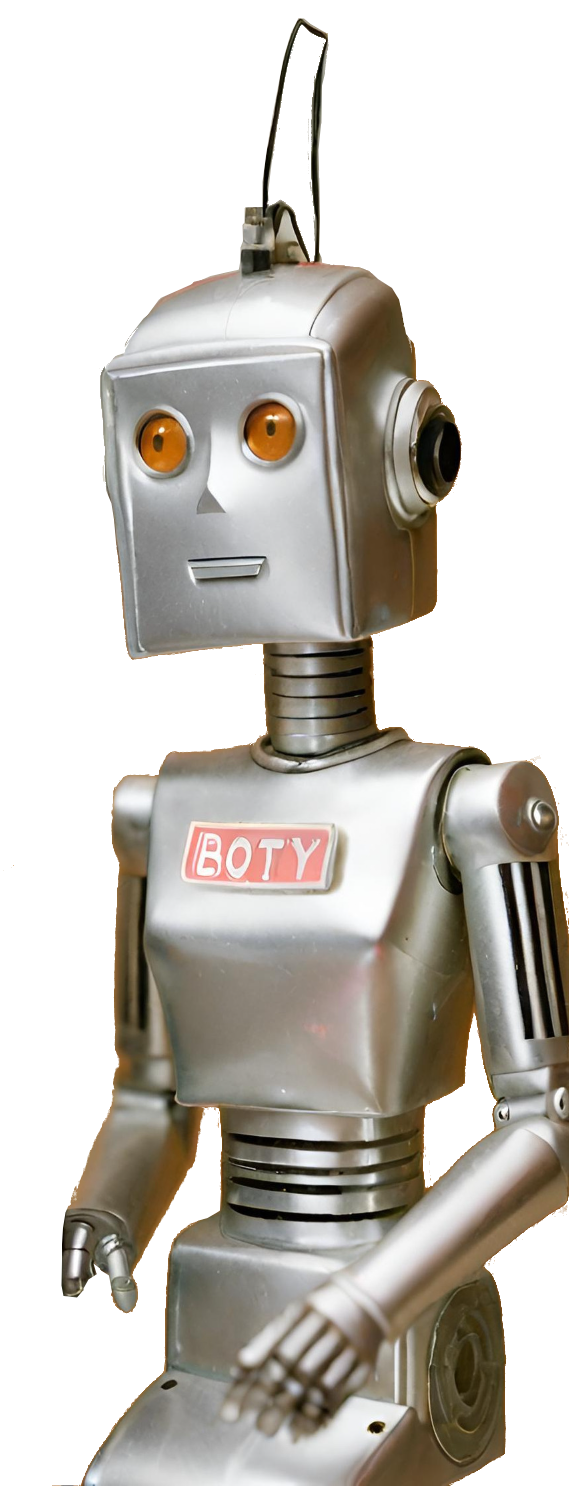

The Daily NOT is an online show based on the most recent fact checks by Lead Stories, presented by robot hosts Artie Ficial and Botty McBotface. Artie’s jokes are (mostly) powered by ChatGPT and Botty gets her information straight from the latest Lead Stories fact checks (written by Actual Human Journalists™).

About The Daily NOT

The Daily NOT appears daily on the YouTube, Instagram, WhatsApp and TikTok channels of Lead Stories, as do short videos with each of the individual fact checks in the show. The fact check videos also appear on X and Threads.

Each fact check video is accompanied by a full article on the Lead Stories website that has the full details and all sources used to reach the conclusion. All content and research is done 100% by human fact checkers.

Lead Stories is a fact checking website that was founded in 2015. It is a verified signatory of the IFCN Code of Principles and part of both Meta’s Third-Party Fact-Checking Partnership and ByteDance’s Third-Party Fact-Checking Program.

How does it work?

The Daily NOT consists of several short fact checking videos, a soundtrack and an intro/outro. The whole process of creating the show is largely automated. There are two major steps: first the short fact checking videos are generated, and then they are bundled together into a daily show.

Short fact checking videos

Each fact check on the Lead Stories website follows the same basic structure, which is explained in more detail on the “How We Work” page of the site.

The following elements of each fact check article are used for the videos:

- Headline (which usually negates the claim being fact checked)

- First paragraph, consisting of the claim as a question, the conclusion and a short summary of the fact check.

- The thumbnail image that goes with the fact check (most of the time this is a screenshot of the content being fact checked)

- The caption sticker (a very short summary of why there is a problem with the claim, which is superimposed over the thumbnail image on the website).

As a first step the content management system used for the Lead Stories website (Movable Type) generates a JSON file containing these elements. The first paragraph of the article is split into three parts using regular expressions: the question, the conclusion and the summary.
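The splitting step can be illustrated with a minimal Python sketch. The real work happens inside Movable Type and the actual regular expressions are not published, so the pattern and the JSON field names below are assumptions made purely for illustration.

```python
import json
import re

# Assumed shape of a first paragraph: "<question>? <conclusion>. <summary>"
# The pattern is illustrative only; an abbreviation such as "U.S." inside
# the conclusion would need a more careful rule.
FIRST_PARAGRAPH = re.compile(
    r"^\s*(?P<question>.+?\?)\s+(?P<conclusion>.+?[.!])\s+(?P<summary>.+?)\s*$",
    re.DOTALL,
)


def split_first_paragraph(paragraph: str) -> dict:
    """Split a first paragraph into question, conclusion and summary."""
    match = FIRST_PARAGRAPH.match(paragraph)
    if match is None:
        raise ValueError("paragraph does not follow the expected structure")
    return match.groupdict()


def build_record(headline: str, first_paragraph: str,
                 thumbnail: str, caption: str) -> str:
    """Return the JSON text for one fact check (field names are hypothetical)."""
    record = {"headline": headline, "thumbnail": thumbnail, "caption": caption}
    record.update(split_first_paragraph(first_paragraph))
    return json.dumps(record, ensure_ascii=False, indent=2)
```

Raising an error when the paragraph does not match keeps malformed articles out of the video pipeline instead of producing a clip with missing narration.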

Movable Type also generates several “slides” in the form of mini web pages, each containing a combination of these elements. The background of the slides is formed by zooming, blurring and centering on the thumbnail image. Initially the thumbnail image also forms the center part of each slide. The slides are generated in a portrait 1080x1920 pixel format because that ratio fits nicely on a phone screen.

The first slide contains the question, the second slide has the caption sticker and the headline, the third slide shows the headline and the conclusion sentence, the fourth slide shows the headline and the summary and the last slide shows a shortened link to the full fact check and an invitation to like and share.

These slides are then screenshotted using a headless version of the Chrome browser (meaning it runs without a visible window and can be controlled via the command line). This results in several PNG image files. Next, the question, conclusion and summary phrases are sent to Google’s Text-to-Speech API to generate MP3 audio files for each section.
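As a rough sketch of these two steps in Python: the first function builds a headless Chrome command line, the second calls the Google Cloud Text-to-Speech client library. The binary name, the function names and the default voice language are assumptions; the actual scripts used for the show are not published.

```python
def screenshot_command(slide_url, png_path, width, height, chrome="google-chrome"):
    """Build a headless Chrome command that saves one slide as a PNG.

    The binary name differs per platform ("chromium", "chrome.exe", ...),
    so the `chrome` default is an assumption to adjust.
    """
    return [
        chrome,
        "--headless",
        "--hide-scrollbars",
        f"--window-size={width},{height}",
        f"--screenshot={png_path}",
        slide_url,
    ]


def synthesize_mp3(text, mp3_path, language_code="en-US"):
    """Turn one phrase into an MP3 file with Google Cloud Text-to-Speech.

    Needs the google-cloud-texttospeech package and valid credentials.
    The import is local so the screenshot helper works without it.
    """
    from google.cloud import texttospeech

    client = texttospeech.TextToSpeechClient()
    response = client.synthesize_speech(
        input=texttospeech.SynthesisInput(text=text),
        voice=texttospeech.VoiceSelectionParams(language_code=language_code),
        audio_config=texttospeech.AudioConfig(
            audio_encoding=texttospeech.AudioEncoding.MP3
        ),
    )
    with open(mp3_path, "wb") as handle:
        handle.write(response.audio_content)
```

The command list can be passed to `subprocess.run(cmd, check=True)` once per slide.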

Next, the slides are paired up with the audio files using the open source FFmpeg software to create a series of short video clips of the audio being played over the respective slide. The second slide (the one with the caption sticker on it) is paired with a sound effect from the Pixabay library and the last slide with a call to like and share the story.
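Pairing one still image with one audio file is a common FFmpeg recipe. The Python sketch below only builds the command line; the codec choices and the function name are assumptions, not necessarily the settings used for the show.

```python
def still_clip_command(slide_png, audio_mp3, out_mp4):
    """Build an FFmpeg command that shows one slide for the length of its audio."""
    return [
        "ffmpeg", "-y",
        "-loop", "1", "-i", slide_png,   # repeat the still image as video frames
        "-i", audio_mp3,                 # narration or sound effect
        "-c:v", "libx264", "-tune", "stillimage",
        "-pix_fmt", "yuv420p",           # widely supported pixel format
        "-c:a", "aac",
        "-shortest",                     # stop when the audio ends
        out_mp4,
    ]
```

Because the image input loops forever, `-shortest` is what makes the clip end together with the audio track.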

The first slide gets one more modification to immediately grab the attention of viewers. There is an empty space at the top where the headline will appear in later slides. That space gets filled with an overlaid video zooming out from a “surprised face” and a blinking caption that reads “Fact check incoming” as the claim is being read out. The videos are based on a library of 64 possible “surprised face” images that were generated using Canva’s AI tool and then turned into short video clips with a zoom-out and a blinking text effect, again using FFmpeg.

Next, all short clips are merged together into one long clip with FFmpeg’s “concat” function, and music (again from Pixabay) is mixed in under the voice track. At the very start of the video a very brief snippet, one tenth of a second long, is added that previews the second slide (the one with the headline and the caption sticker). This is done because by default the first frame becomes the thumbnail image on several of the platforms where videos can be uploaded.
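A minimal Python sketch of the merge and the music mix follows. It assumes the concat demuxer (FFmpeg also has a concat filter, and the text does not say which one is used) and a hypothetical music volume of 0.2.

```python
def concat_list(clip_paths):
    """Return the text of a list file for FFmpeg's concat demuxer."""
    lines = []
    for path in clip_paths:
        # Inside single quotes a quote is written as: close, escaped quote, reopen.
        escaped = path.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_command(list_path, out_path):
    """Join the listed clips without re-encoding (clips must share codecs)."""
    return ["ffmpeg", "-y", "-f", "concat", "-safe", "0",
            "-i", list_path, "-c", "copy", out_path]


def mix_music_command(video_path, music_path, out_path, music_volume=0.2):
    """Mix a quieter music track with the existing voice track."""
    filter_graph = (
        f"[1:a]volume={music_volume}[music];"
        "[0:a][music]amix=inputs=2:duration=first[mixed]"
    )
    return ["ffmpeg", "-y", "-i", video_path, "-i", music_path,
            "-filter_complex", filter_graph,
            "-map", "0:v", "-map", "[mixed]",
            "-c:v", "copy", "-c:a", "aac", out_path]
```

Setting `duration=first` ends the mix when the voice track ends, so a longer music file does not extend the video.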

If the content being fact checked happens to be a Tweet with a video, a YouTube video, a Facebook reel or another link recognized as a video, the yt-dlp software tool is used to try to download the video so it can be overlaid in the center part of the layout of the final video. When this happens, the part of the video that has the thumbnail image is blurred to make the overlaid video stand out more.
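The “try to download” behaviour could look roughly like the Python sketch below, which treats a failed download as a normal outcome so the pipeline can fall back to the thumbnail image. The function names are hypothetical and only the basic `-o` output option of yt-dlp is used.

```python
import subprocess


def download_command(url, out_path, binary="yt-dlp"):
    """Build the yt-dlp command line; -o sets the output file name template."""
    return [binary, "-o", out_path, url]


def try_download(url, out_path, binary="yt-dlp"):
    """Return True if the video was downloaded, False if anything went wrong."""
    try:
        result = subprocess.run(
            download_command(url, out_path, binary),
            capture_output=True,
        )
    except FileNotFoundError:
        return False  # the tool is not installed on this machine
    return result.returncode == 0
```

A caller would then pick the layout with the overlaid video only when `try_download(...)` returns `True`.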

Next a small animation effect is added at the point in the video when the caption sticker appears. It consists of a thumbs-up, heart and star emoji floating upwards from positions around the sticker and fading out again. This is done by overlaying image files using FFmpeg’s fade, scale and alpha filters.

The very final step is to send the video file through OpenAI's Whisper API to get a transcription of the text with timestamps for the exact moment each word is spoken. This information is then used to create captions using FFmpeg's drawtext filter.
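To show how word timestamps can become on-screen captions, here is a small Python sketch. It assumes the transcription has already been fetched and reduced to a list of dictionaries with `word`, `start` and `end` keys; the grouping size, font size and position are made-up values, and the escaping is deliberately crude.

```python
def group_words(words, max_words=3):
    """Group timed words into short caption chunks."""
    chunks = []
    for i in range(0, len(words), max_words):
        group = words[i:i + max_words]
        chunks.append({
            "text": " ".join(w["word"] for w in group),
            "start": group[0]["start"],
            "end": group[-1]["end"],
        })
    return chunks


def clean_caption(text):
    """Drop characters that are special in FFmpeg filter syntax.

    This loses some punctuation; a production version would escape instead.
    """
    text = text.replace("'", "\u2019")  # typographic apostrophe is safe
    return "".join(ch for ch in text if ch not in "\\:%,;[]")


def drawtext_filters(chunks, fontsize=64, y="h-300"):
    """Build one drawtext filter per chunk, each enabled only while spoken."""
    filters = []
    for chunk in chunks:
        filters.append(
            "drawtext="
            f"text='{clean_caption(chunk['text'])}':"
            f"fontsize={fontsize}:fontcolor=white:"
            f"x=(w-text_w)/2:y={y}:"
            f"enable='between(t,{chunk['start']:.2f},{chunk['end']:.2f})'"
        )
    return ",".join(filters)
```

The resulting string would be passed to FFmpeg with `-vf`. Depending on how FFmpeg was built, drawtext may also need an explicit `fontfile` option.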

The result of this process is a finished .mp4 video file that can be uploaded to TikTok, YouTube Shorts, Instagram, WhatsApp, Threads and X. To make uploading easier, a final piece of software generates a ready-made caption that can be copied and pasted into the upload screens of these services. It shows the title of the fact check along with the URL, and it uses ChatGPT to generate a list of suitable hashtags.
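Assembling such a caption is simple string work. The Python sketch below assumes the hashtag suggestions have already been returned by ChatGPT as a list of strings; the clean-up rules and function names are illustrative, not the actual implementation.

```python
def normalize_hashtags(tags):
    """Clean up suggested hashtags: one '#', no spaces, no duplicates."""
    result = []
    for tag in tags:
        cleaned = "#" + "".join(ch for ch in tag if ch.isalnum())
        already_seen = cleaned.lower() in (t.lower() for t in result)
        if len(cleaned) > 1 and not already_seen:
            result.append(cleaned)
    return result


def upload_caption(title, url, tags):
    """Return the text to paste into an upload screen."""
    hashtags = " ".join(normalize_hashtags(tags))
    return f"{title}\n{url}\n{hashtags}"
```

Normalizing the model's output before use guards against stray spaces, repeated tags or missing `#` signs in the suggestions.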

The show

At the end of each day all videos are turned into a mini-talk show with robot presenters Artie Ficial and Botty McBotface. The pictures of Artie and Botty were generated using Canva and the rest of the technology used to create the show is essentially the same as for the fact checking videos.

Because of the structure of the first paragraph of a typical Lead Stories fact check, it is also very easy to turn it into a dialogue:

- Question: Did thing happen?

- Answer: No, that's not true: Thing was a joke from a satire website.

- Reaction: Oh, I see!

- Details: The site in question has a disclaimer that reads ...

- Closing remark: Well, there you have it folks ...

The question, answer and details are already in the text of the fact check. The reactions come from a random list of phrases. For the closing remark we turned to ChatGPT.
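The dialogue structure above can be sketched in a few lines of Python. Which host speaks which line is an assumption here, as are all reaction phrases except “Oh, I see!”; the closing remark is passed in because it comes from ChatGPT and is vetted by a human first.

```python
import random

# Only the first phrase appears in the example above; the rest are hypothetical.
REACTIONS = ["Oh, I see!", "Good to know.", "That clears it up."]


def build_dialogue(question, conclusion, summary, closing_remark, rng=random):
    """Return the five dialogue lines as (speaker, text) pairs."""
    return [
        ("Artie", question),               # from the fact check
        ("Botty", conclusion),             # from the fact check
        ("Artie", rng.choice(REACTIONS)),  # picked at random
        ("Botty", summary),                # from the fact check
        ("Artie", closing_remark),         # generated, then vetted by a human
    ]
```

Passing a seeded `random.Random` instance as `rng` makes the choice of reaction repeatable, which is handy when re-rendering an episode.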

Note: We did have to work on refining the prompt with enough safeguards to prevent inappropriate jokes when the topic of the fact check involves tragic events, diseases, deaths, etc. And of course you can't use ChatGPT-generated text before it is vetted and corrected (if needed) by humans, but that still is far less work than writing it all by hand.